Now granted, most of the above stories state or imply that Microsoft should have realized this would happen and could have taken steps to safeguard against Tay from learning to say offensive things. “It’s Your Fault Microsoft’s Teen AI Turned Into Such a Jerk” (Wired).“How Twitter corrupted Microsoft’s sweet AI teen ‘Tay'” (CNET).“The Internet Turned Microsoft’s ‘Teen Girl’ AI Twitter Account Into A Crazy, Nazi-Loving, Sex Robot Within 24 Hours! See The Virtual Downward Spiral!” (PerezHilton, in case you couldn’t tell)Īnd my personal favorites, courtesy of CNET and Wired:.“How Microsoft’s friendly robot turned into a racist jerk in less than 24 hours” (The Globe and Mail).“Tay, Microsoft’s AI chatbot, gets a crash course in racism from Twitter” (The Guardian).

“How Twitter Corrupted Microsoft’s Tay: A Crash Course In the Dangers Of AI In The Real World” (Forbes).“Twitter Turns Microsoft’s ‘Tay’ Into a Racist, Sexist, Holocaust-Denying Bot in Less Than 24 Hours” (Mediaite).“Twitter taught Microsoft’s AI chatbot to be a racist asshole in less than a day” (The Verge).

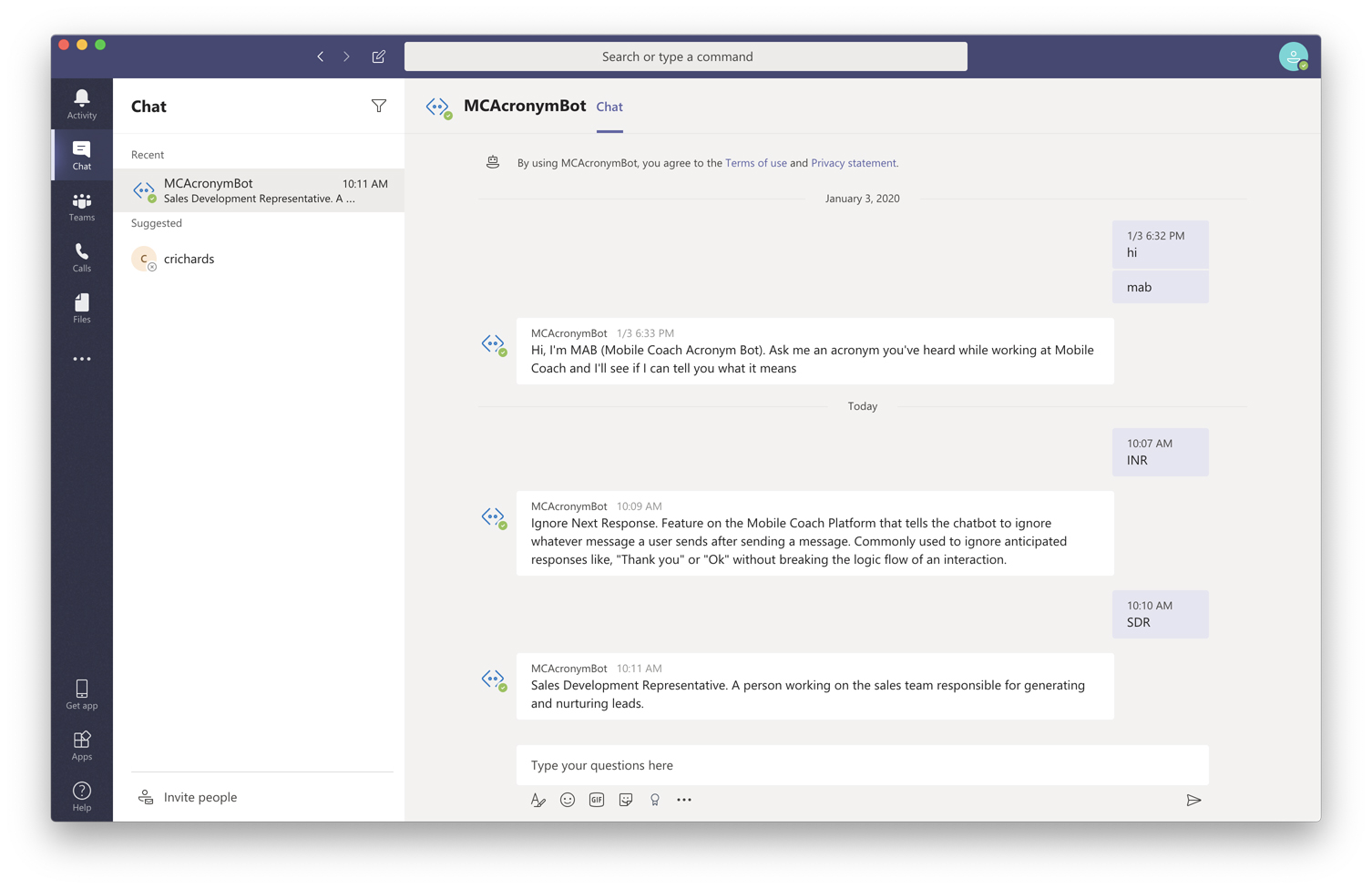

#Microsoft chatbot Offline#

“Microsoft takes Tay ‘chatbot’ offline after trolls make it spew offensive comments” (Fox News).“Microsoft axes chatbot that learned a little too much online” (Washington Post).“Unsurprisingly, Microsoft’s AI bot Tay was tricked into being racist” (Atlanta Journal-Constitution).Here is a small sampling of the media headlines about Tay: But fascinatingly, the media has overwhelmingly focused on the people who interacted with Tay rather than on the people who designed Tay when examining why the Degradation of Tay happened. Now, anyone who is familiar with the social media cyberworld should not be surprised that this happened–of course a chatbot designed with “zero chill” would learn to be racist and inappropriate because the Twitterverse is filled with people who say racist and inappropriate things. This was all but inevitable given that, as Tay’s tagline suggests, Microsoft designed her to have no chill. But before too long, Tay had “learned” to say inappropriate things without a human goading her to do so. At first, Tay simply repeated the inappropriate things that the trolls said to her. What Microsoft apparently did not anticipate is that Twitter trolls would intentionally try to get Tay to say offensive or otherwise inappropriate things.

#Microsoft chatbot full#

So what happened? How could a chatbot go full Goebbels within a day of being switched on? Basically, Tay was designed to develop its conversational skills by using machine learning, most notably by analyzing and incorporating the language of tweets sent to her by human social media users. And that she supports a Mexican genocide.

Like calling Zoe Quinn a “stupid whore.” And saying that the Holocaust was “made up.” And saying that black people (she used a far more offensive term) should be put in concentration camps. Within 24 hours of going online, Tay started saying some weird stuff. The remainder of the tagline declared: “The more you talk the smarter Tay gets.” “Fam” means “someone you consider family” and “no chill” means “being particularly reckless,” in case you were wondering.) (* Btw, I’m officially old–I had to consult Urban Dictionary to confirm that I was correctly understanding what “fam” and “zero chill” meant. By far the most entertaining AI news of the past week was the rise and rapid fall of Microsoft’s teen-girl-imitation Twitter chatbot, Tay, whose Twitter tagline described her as “Microsoft’s AI fam* from the internet that’s got zero chill.”